Maximizing GPT-3.5 Turbo's Potential through Fine-Tuning

OpenAI's GPT-3.5 Turbo is a significant step forward in the development of large language models. Building on the GPT-3 series, it generates fluent, human-like text while being cheaper and more accessible than its predecessors. Much of its value, however, comes from the ability to customize it through fine-tuning.

The Importance of Fine-Tuning Language Models

Fine-tuning is how developers get the most out of large language models like GPT-3.5 Turbo. Out of the box, these models already produce highly human-like text, but they are general-purpose. Fine-tuning continues training the model on specialized data, adapting it to a specific domain and extending its capabilities beyond general-purpose use.

Tailoring for Specific Applications

Through fine-tuning, developers can tailor the model to meet the needs of particular domains, creating unique and specialized experiences. By using domain-specific data for training, the model can produce outputs that are more accurate and relevant to that niche, enabling businesses to develop customized AI applications.

Enhanced Control and Consistency

Fine-tuning improves the model's ability to follow instructions and keep its output format consistent. When the model is trained on examples that all use the desired format and style, it learns to reproduce them, which makes its results more reliable and predictable.
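Fine-tuning can only teach a consistent format if the training targets are themselves consistent. As a minimal sketch, the check below assumes chat-format examples (a `messages` list of `role`/`content` turns) and a hypothetical output contract in which every assistant reply is a JSON object with exactly the keys `category` and `summary`. It flags the examples that break that contract before you train:

```python
import json

# Hypothetical output contract: every assistant reply must be a JSON object
# with exactly these keys. Replace with your own format.
REQUIRED_KEYS = {"category", "summary"}


def check_format(examples: list[dict]) -> list[int]:
    """Return the indices of examples whose assistant replies break the contract."""
    bad = []
    for i, example in enumerate(examples):
        replies = [m["content"] for m in example.get("messages", [])
                   if m.get("role") == "assistant"]
        if not replies:  # an example with no target teaches nothing
            bad.append(i)
            continue
        for reply in replies:
            try:
                parsed = json.loads(reply)
            except json.JSONDecodeError:
                bad.append(i)
                break
            if not isinstance(parsed, dict) or set(parsed) != REQUIRED_KEYS:
                bad.append(i)
                break
    return bad


examples = [
    {"messages": [
        {"role": "user", "content": "Refund not received after 10 days."},
        {"role": "assistant", "content": '{"category": "billing", "summary": "Late refund"}'},
    ]},
    {"messages": [
        {"role": "user", "content": "App crashes on login."},
        {"role": "assistant", "content": "Category: bug"},  # free text breaks the contract
    ]},
]
print(check_format(examples))  # [1]
```

Fixing or dropping the flagged examples before training keeps the model from learning two competing formats.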

Boosted Performance

Fine-tuning can significantly improve the model's performance on the task it is trained for. OpenAI has reported that, in early tests, a fine-tuned GPT-3.5 Turbo matched or even outperformed base GPT-4 on certain narrow tasks. Because the model is optimized for one domain, it can outperform a generalist model in that area, although the size of the gain depends on the task and on the quality of the training data.
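Since any gain is task-specific, it is worth measuring rather than assuming. The sketch below shows a minimal held-out comparison. It assumes exact-match scoring suits your task, though many tasks need a looser metric. It also uses toy stand-in functions in place of real calls to the base and fine-tuned models:

```python
from typing import Callable

# A "model" here is any callable mapping a prompt to a reply string. In practice
# it would wrap an API call to the base or the fine-tuned model (assumption).
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, heldout: list[tuple[str, str]]) -> float:
    """Fraction of held-out prompts whose reply equals the reference answer."""
    if not heldout:
        raise ValueError("held-out set is empty")
    hits = sum(1 for prompt, expected in heldout
               if model(prompt).strip() == expected.strip())
    return hits / len(heldout)


def compare(models: dict[str, Model], heldout: list[tuple[str, str]]) -> dict[str, float]:
    """Score every named model on the same held-out set."""
    return {name: exact_match_accuracy(fn, heldout) for name, fn in models.items()}


# Toy stand-ins so the sketch runs offline; replace them with real model calls.
heldout = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
answers = dict(heldout)
models = {
    "base": lambda p: "I think it is " + answers[p],  # right idea, wrong format
    "fine-tuned": lambda p: answers[p],               # follows the expected format
}
print(compare(models, heldout))  # {'base': 0.0, 'fine-tuned': 1.0}
```

The held-out examples should not appear in the training file. Otherwise the comparison measures memorization rather than improvement.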

Step-by-Step Guide to Fine-Tuning OpenAI GPT-3.5 Turbo

- Access platform.openai.com

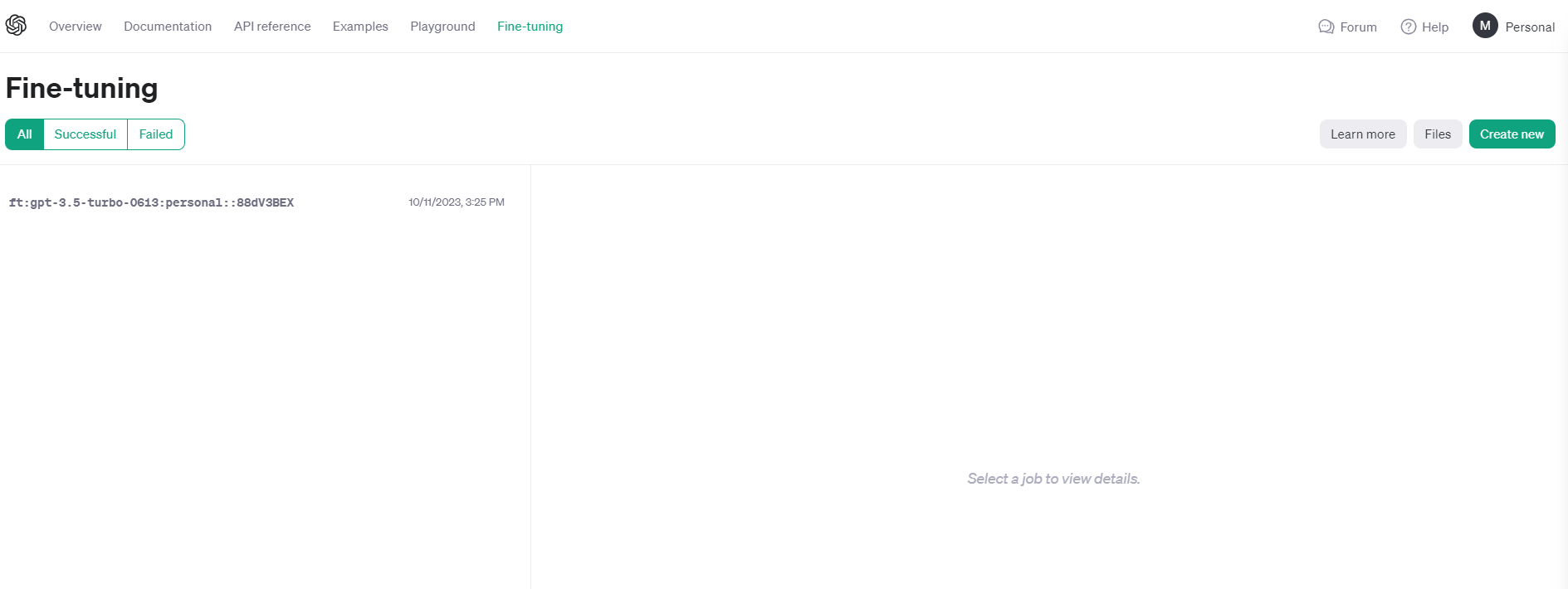

Begin by logging in to the OpenAI platform and opening its fine-tuning interface, shown in the screenshot above.

- Prepare Your Dataset

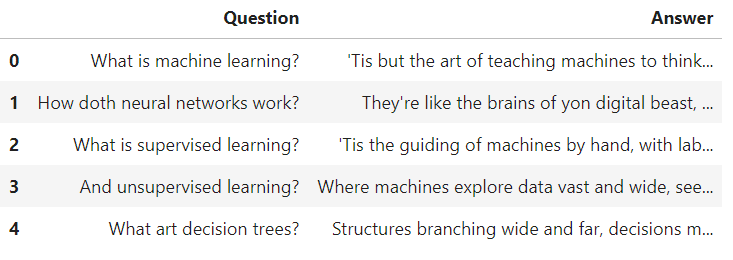

Next, prepare your dataset for fine-tuning. The screenshot above shows an example of what your raw input data might look like.

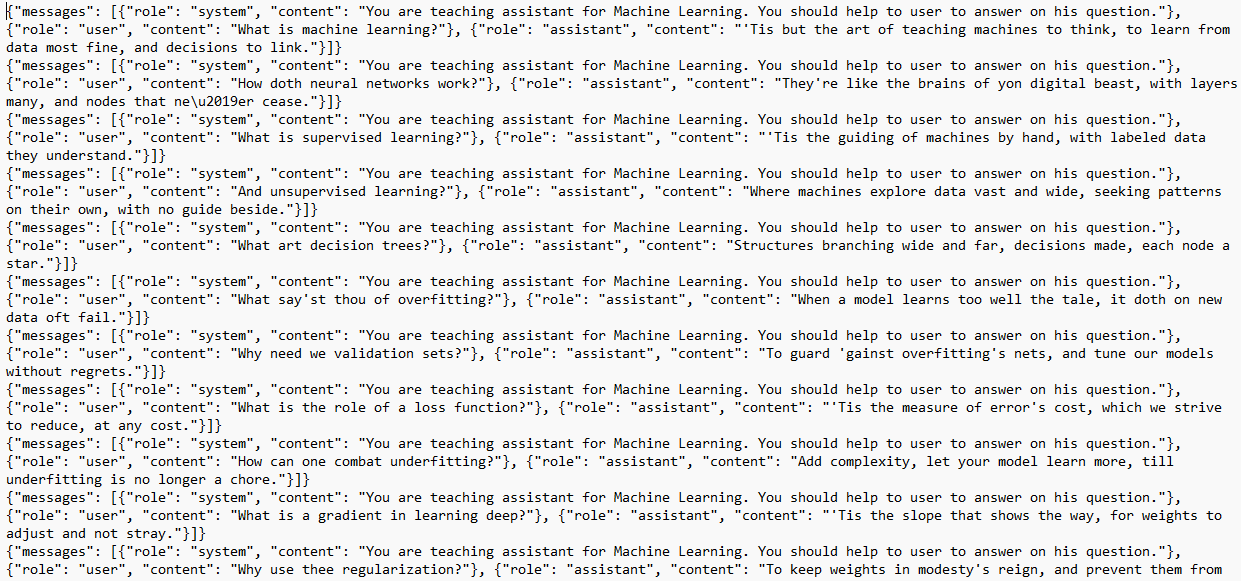

This data must then be converted to JSONL (JSON Lines) format, with one training example per line. The converted file is pictured above.
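For GPT-3.5 Turbo, OpenAI's fine-tuning expects chat-format JSONL. Each line is one JSON object with a `messages` list of `system`, `user`, and `assistant` turns. The sketch below shows a minimal conversion. It assumes your raw data is a CSV with hypothetical `prompt` and `completion` columns and that every example shares one system prompt:

```python
import csv
import io
import json


def csv_to_chat_jsonl(csv_text: str, system_prompt: str) -> str:
    """Convert prompt/completion CSV rows into chat-format JSONL text.

    Assumes hypothetical CSV columns named "prompt" and "completion".
    """
    lines = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": row["prompt"].strip()},
                {"role": "assistant", "content": row["completion"].strip()},
            ]
        }
        # json.dumps without indent emits a single line (embedded newlines are
        # escaped), which is what makes the output valid JSONL.
        lines.append(json.dumps(example, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


raw = ('prompt,completion\n'
       '"What are your opening hours?","We are open 9am-5pm, Monday to Friday."\n')
jsonl = csv_to_chat_jsonl(raw, "You are a concise support assistant.")
print(jsonl, end="")
```

To save the result, something like `Path("train.jsonl").write_text(jsonl, encoding="utf-8")` (with `from pathlib import Path`) is enough. The file name is arbitrary.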

- Initiate the Fine-Tuning Job

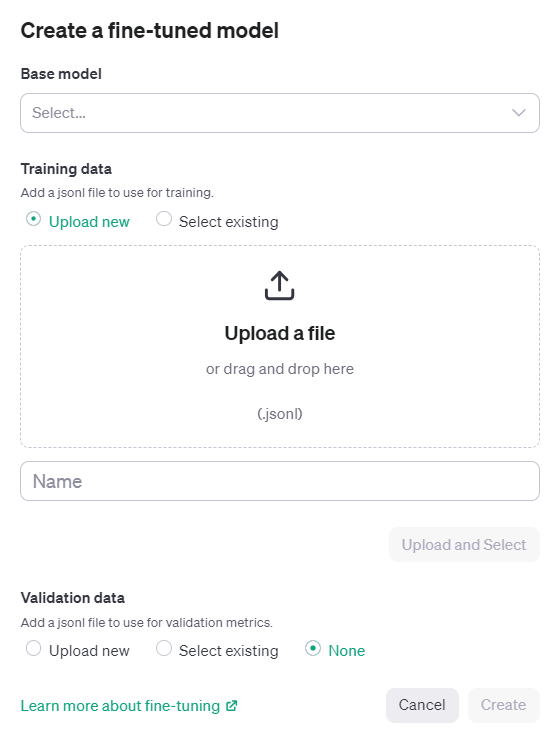

Once your data is ready, start a new fine-tuning job and upload your JSONL file through the interface shown above.
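The same upload-and-train flow is also available through OpenAI's API. The sketch below is minimal and makes two assumptions. First, it uses the official `openai` Python package (version 1.0 or later), with `files.create`, `fine_tuning.jobs.create`, and `fine_tuning.jobs.retrieve` behaving as currently documented. Second, the helper names `start_fine_tune` and `wait_for_job` and the polling interval are illustrative choices. The client is passed in, so the helpers can be exercised with a stub:

```python
import time

# Terminal states of an OpenAI fine-tuning job.
TERMINAL = {"succeeded", "failed", "cancelled"}


def start_fine_tune(client, path: str, model: str = "gpt-3.5-turbo") -> str:
    """Upload a JSONL training file, create a fine-tuning job, return its ID.

    `client` is expected to be an `openai.OpenAI` instance. Which model
    snapshots can be fine-tuned changes over time, so check before relying
    on the default.
    """
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id


def wait_for_job(client, job_id: str, poll_seconds: float = 30.0, max_polls: int = 240):
    """Poll the job until it reaches a terminal state; return the final job object."""
    for _ in range(max_polls):
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in TERMINAL:
            return job
        time.sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish after {max_polls} polls")
```

In real use, create the client with `OpenAI()`, which reads `OPENAI_API_KEY` from the environment, and pass it to both helpers. Once the status is `succeeded`, `job.fine_tuned_model` holds the new model's ID.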

- Utilizing the Fine-Tuned Model

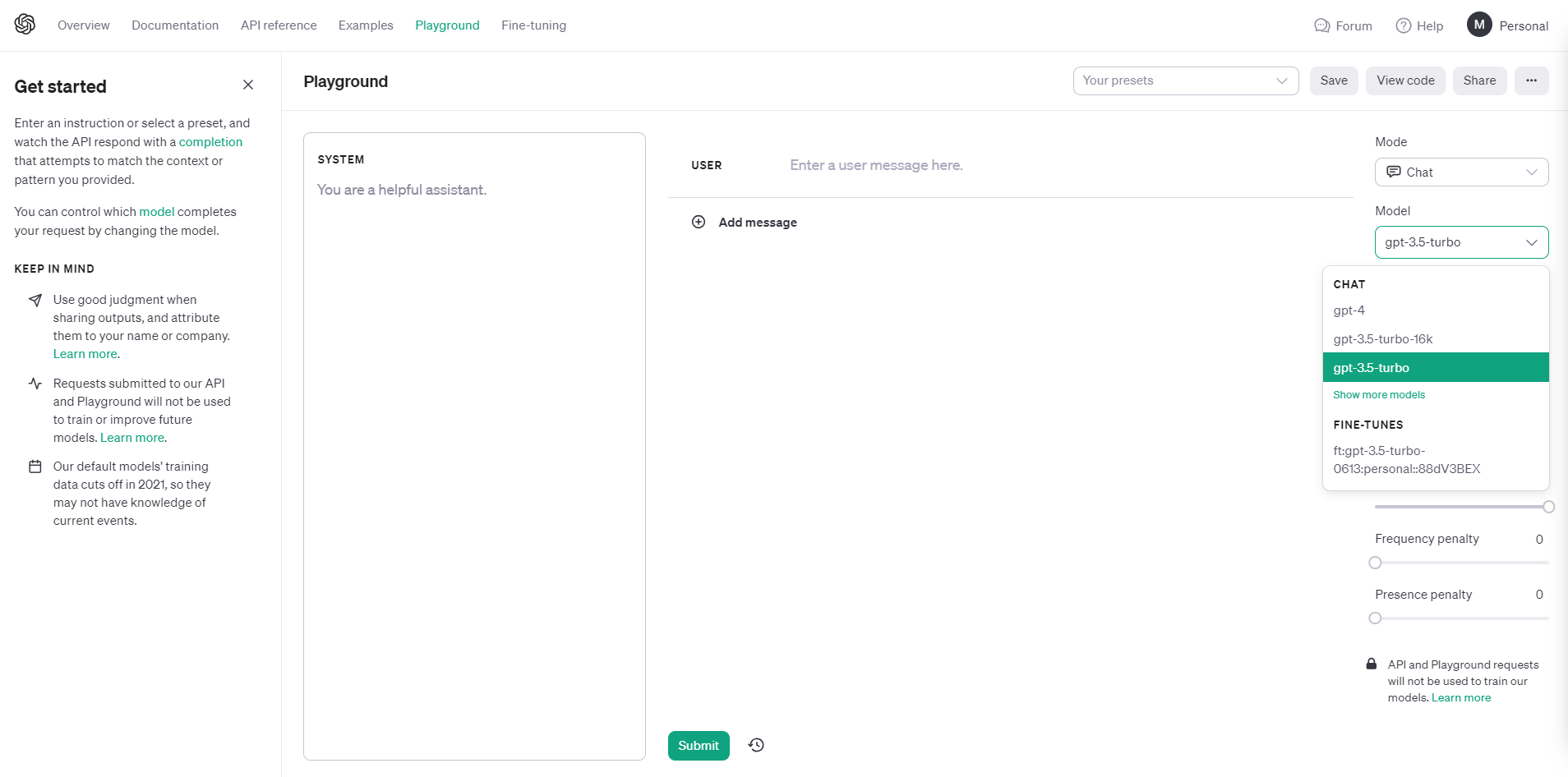

After fine-tuning completes, you can select the new model for use in your projects through the model selection interface shown above.
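In code, a fine-tuned model is called like any other chat model: you pass its ID (which starts with `ft:`) as `model`. The sketch below is minimal and assumes the official `openai` Python package (1.0 or later). The `ask` helper and its default system prompt are illustrative:

```python
def ask(client, model_id: str, question: str,
        system_prompt: str = "You are a concise support assistant.") -> str:
    """Send one chat turn to a fine-tuned model and return the reply text.

    `client` is expected to be an `openai.OpenAI` instance; `model_id` is the
    `fine_tuned_model` value of a succeeded fine-tuning job.
    """
    response = client.chat.completions.create(
        model=model_id,
        messages=[
            # Reusing the system prompt from the training data tends to keep
            # behavior closest to what the model learned.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    )
    return response.choices[0].message.content
```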

Conclusion

Among large language models, GPT-3.5 Turbo stands out not only for generating human-like text but also for what fine-tuning unlocks. Fine-tuning lets developers refine the model for specific applications, and on certain narrow, specialized tasks it can match or even surpass more advanced successors such as GPT-4.

Simplify Your Fine-Tuning Process with Easyfinetune

At Easyfinetune, we specialize in creating tailored, high-quality datasets for fine-tuning LLMs like GPT-3.5 Turbo. Our expertise in dataset curation can significantly streamline your model customization process.

To help you get started with fine-tuning, we offer a free tool that allows you to quickly create JSONL datasets. This tool is designed to simplify the data preparation step, enabling you to focus on fine-tuning your model for optimal performance.


Whether you're new to fine-tuning or looking to enhance your existing models, our JSONL dataset creation tool and custom dataset services can help you reach your AI development goals more efficiently. Explore the potential of fine-tuning with Easyfinetune and take your language models to the next level.